Artificial Intelligence World Summit 2019

‘𝟷st day at 🌍⛰ 🤖intelligence’: the summit through my lens

Cheedar #collaboration #human judgement #explainability #ethical #data ownership #responsibility

🚲-ing early in the morning towards Amsterdam Central station to catch the train to Zaandam, where the ‘World Summit AI’ is held. It’s the Netherlands, where it doesn’t 🌦rain 366 days a year, so it’s not easy to get caught in a downpour and arrive wet at the Summit. Great start of the day 😏

🧙♀️Rebooting AI — building AI we can trust (book)

#deep learning per se != trust

After I registered my badge, I entered the ‘dark room’, where I heard Gary M., a professor of Psychology and Neural Science and a sceptic of deep learning, share the following point of view:

A computer beating a human in ‘Jeopardy!’ doesn’t mean that we are on the doorstep of intelligent machines.

Why not? Because the world of games is very different from the real world. Games have fixed rules that don’t change. Later on he mentions a discussion he had with the deep learning pioneer Yann LeCun (you can read more about it here) and that

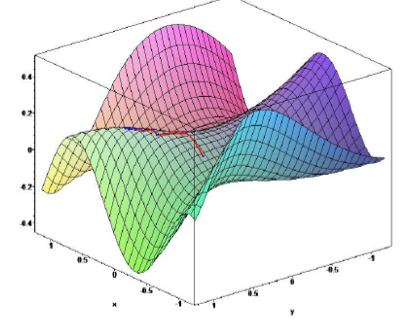

gradient-descent-based symbolic artificial intelligence or, put differently, a reconciliation between the symbolic and connectionist paradigms is needed to endow machines with reasoning and causality-learning capabilities.

Let’s imagine now that we have succeeded in developing human-level intelligent machines that can operate in our complex and open-ended world.

What consequences would human-level AI have for society?

That was one of the critical questions that Gary posed to the audience, and one that he discusses in depth with Ernest Davis in their book. The takeaway is that we need a model of the world, and not only a model of statistics, in our quest for human-level AI.

🌊 AI as a large scale system environment- scalability and stability

#AI infra challenges = challenges with any other infra!

Imagine the nightmare of having just one machine to test or deploy your model, and it just crashes! This wouldn't happen with a cluster of machines, which additionally allows for efficient usage of computational resources.

Fernanda W., a cyberfeminist and engineer at Facebook, talks about the different roles and objectives in a company that can cause conflicts and thereby extra hurdles in the deployment of the final product or service:

- M.L. engineers want to start and finish fast

- Infra engineers want good fleet utilization and efficient jobs (e.g., database maintenance, I/O)

- Product engineers want better models to improve the features (e.g., increase user engagement)

- Nobody wants outages and regressions

When it comes to scheduling jobs and managing the cluster, be careful with the allocation of resources and with network usage; monitor not only the job behavior but also the human behavior (e.g., someone releases 100 jobs at once).
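To make the ‘100 jobs at once’ point concrete, here is my own minimal sketch (not something shown in the talk) of a per-user cap on job submissions within a sliding time window. The class name `SubmissionLimiter`, the limit of 10 jobs and the 60-second window are all assumptions I picked for illustration.

```python
from collections import defaultdict, deque


class SubmissionLimiter:
    """Caps how many jobs one user may submit within a sliding time window."""

    def __init__(self, max_jobs, window_seconds):
        self.max_jobs = max_jobs
        self.window_seconds = window_seconds
        # user -> timestamps of that user's recently accepted jobs
        self._submissions = defaultdict(deque)

    def try_submit(self, user, now):
        """Return True and record the job if the user is under the cap."""
        recent = self._submissions[user]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_jobs:
            return False
        recent.append(now)
        return True


# Hypothetical limits: 10 jobs per user per 60 seconds.
limiter = SubmissionLimiter(max_jobs=10, window_seconds=60)
# Someone releases 100 jobs at once (all at t=0): only the first 10 get through.
accepted = sum(limiter.try_submit("alice", now=0.0) for _ in range(100))
print(accepted)
```

A real scheduler would probably queue the rejected jobs rather than drop them; the sketch only shows where the human behavior gets monitored.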

When it comes to data management, attribute data ownership properly. Imagine how painful it can be to have terabytes of data that no one uses or needs and that are never deleted! Who owns the data? Who needs it?
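The two questions can be turned into a simple check. Below is a rough sketch of my own, assuming a hypothetical catalog where every dataset records an owner and a last-access date; the `Dataset` fields, the 180-day threshold and the example entries are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Dataset:
    name: str
    owner: Optional[str]  # None means nobody has claimed it
    last_accessed: date
    size_tb: float


def needs_review(ds, today, max_idle_days=180):
    """Flag datasets with no owner or no access within max_idle_days."""
    idle = today - ds.last_accessed
    return ds.owner is None or idle > timedelta(days=max_idle_days)


# Made-up reference date and catalog entries.
today = date(2019, 10, 9)
catalog = [
    Dataset("click_logs", "ads-team", date(2019, 10, 1), 12.0),
    Dataset("old_experiment", None, date(2019, 9, 30), 40.0),
    Dataset("archive_2016", "infra", date(2017, 1, 15), 250.0),
]
flagged = [ds.name for ds in catalog if needs_review(ds, today)]
print(flagged)
```

Flagging is deliberately separate from deleting: the list is a starting point for asking the owner, not a cleanup script.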

When it comes to testing and releasing, where A/B testing has a lot of problems, achieve the result you want without breaking something else.

Claim your working environment as your own. Make infrastructure engineers your friends; they had the same problems before. Look at the similarities instead of the differences.

🔐 ‘The ever growing intersection of blockchain and AI’

# AI of tomorrow = decentralized

How are most apps built today? 3 things:

- Logic: a mix of client & server

- Data: centralized

- Identity: mail, social etc

And as users we trust that apps won’t transmit malicious content and that our data won’t be used without our permission.

Diwaker G. is the head of Engineering at Blockstack, a decentralized app ecosystem that puts people in control of their data via blockchain technologies. Two of blockchain’s key properties are enhanced security and decentralization. But what is a decentralized app? An app where users choose where their data goes.

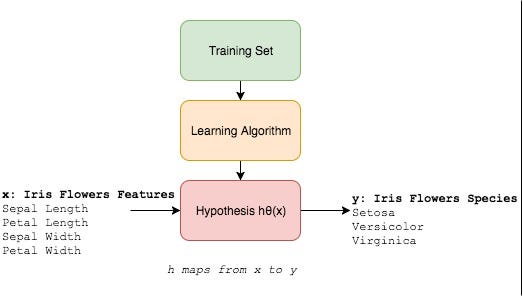

What does this have to do with AI? Training and accessing ML models requires the necessary resources, such as a model, data, and infrastructure. He introduced the new framework proposed by Microsoft, which makes ML accessible to everyone by using the resources they already have, such as browsers and apps.

Democratized ML via blockchain = users control data, limited expertise & resources

🚯‘What makes humans also AI irResponsible: How to inject your AI with R?’

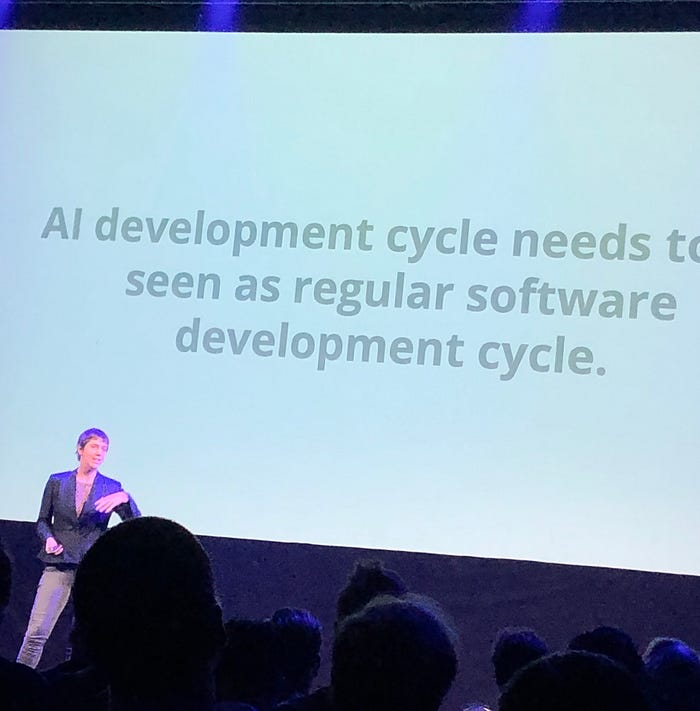

# Guidelines in software engineering = guidelines in AI engineering

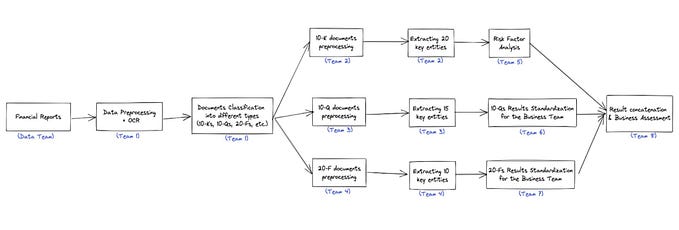

How to inject R in the AI product? Dagmar M. explains that the first step in building an AI system is to define what the system should do. Requirements in software engineering were developed for exactly that reason. So, once again, the AI process isn’t so different from any other software process. You come up with what you want to implement, and ethical requirements should have the highest priority.

Accountability & Awareness! Let’s say you have built a chatbot, a conversational system or another AI app that users interact with: did you make it explicit that they are interacting with a non-human agent? Users should be aware of that. Additionally, when something goes wrong, who is responsible?

Cognitive Bias! Imagine how difficult it is for a computer to detect cognitive biases, which are … everywhere. Confirmation bias is about favoring information that confirms your existing beliefs. Anchoring, or focalism, is about depending heavily on the initial piece of information offered to you when making decisions. Then, how do we know that definitions and arguments are valid? Neil deGrasse Tyson argued in his podcast series ‘StarTalk’ that cognitive bias is so important for our quest for truth that a whole course on it should be taught to school students.

Fairness! Are the data diverse and representative, or do they reflect only a specific part of the population? And have you tested for any problematic use cases?
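One quick way to start on the ‘representative?’ question is to compare each group’s share in the data with its share in the population you care about. This is a sketch of my own, not something from the talk; the group labels, the reference shares and the 10-percentage-point threshold are made up.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return share-in-data minus share-in-population for each group."""
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical group labels and population shares.
groups_in_data = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
population = {"a": 0.5, "b": 0.3, "c": 0.2}

gaps = representation_gaps(groups_in_data, population)
# Groups under-represented by more than 10 percentage points.
under = [group for group, gap in gaps.items() if gap < -0.1]
print(under)
```

Matching shares are no proof of fairness, of course; the check only catches the most obvious gaps before you move on to testing problematic use cases.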

Clearly define the purpose of the system. Responsible AI needs responsible humans.

🧐🕴 Panel session: the impact of AI on the city

# Cooperating to defend citizens’ rights in an AI empowered city

Profs Sander Klous, Marc Salomon, Ger Baron, Meeri Haataja, and Hans Bos agree that citizens should benefit from the new technology, and discuss the impact of AI on them by debating the provocative statements below.

AI allows for more objective decision making?

‘Yes, but only if you combine it with a more human-centric approach.’

Politics is inherently subjective, and everything, including an algorithm, has politics.

But how to make technology more honest? Firstly, for every rule we make, we discuss it and ensure that it is ethical; and secondly, the design should be transparent, applicable, and replicable.

What is the success of AI in the city? It’s always subjective in a way, and I hope that we will make it more objective. This is linked to the way WE implement it. Put effort into decisions and check for potential biases. We should ask why we are making this decision and why we are developing this specific tech product. The types of effort that could make AI objective are: transparency, variety in data, and an understanding of how the algorithms are developed and of how we work. These all sit at the level of principles, and transparency shows how we actually put them into practice. In this process, we make sure that customers/citizens understand how the platform they are using works.

At the other extreme, we can claim that if we don’t know how people think, then why should we know how an algorithm ‘thinks’?

And the next question that arises is: if we can’t explain an algorithm, does that imply that we can’t use it? The answer depends on the domain in which the algorithm is applied (e.g., healthcare), the decision it is making (e.g., cancer detection), and its specific purpose. We have to put a lot of effort into that and acquire a deep understanding of the algorithm, e.g., maybe by designing a global certification of algorithms. These would have clearly defined criteria and evidence! Not much research or thought has gone into this yet.

We need security by design, explainability by design and research oriented towards understanding algorithms

AI leads to greater societal inequality?

In the transportation industry, self-driving cars will introduce a new form of congestion, which will affect people who can’t afford an autonomous vehicle. In another domain like healthcare, AI could drive down costs so that it becomes accessible to more people. So it’s debatable, and it depends on the specific use case.

We should accept that no tech is neutral; it’s an instrument, and how it is used and how it should be used will determine its effects on equality.

We should pay attention to this concern and act smart, especially over the years. On the other hand, we don’t own resources such as data, expertise and algorithms the way a company such as Microsoft does. Check who owns the data.

We haven’t yet thought this through as a society. Now is the time to ask not what a computer can do, but rather whether and under what circumstances we’d allow it to do it.

AI leads to more privatization of public services?

A huge challenge is to understand human relationships & incentives.

How do we use public power? I haven’t seen public authorities with massive data science teams. We see our top students go to Google and other big tech companies, and few go to the municipality: firstly because those companies offer much better salaries, and secondly because they offer more opportunities for personal development. For us it is about democracy: we should collaborate with PhDs, understand society and invest in the city.

❤️🧠Panel discussion: Developing human centered AI through multidisciplinary collaboration

# Human judgement taken from a big variety of human-related disciplines.

Ron Chrisley, Joris van Hoboken, Karen Croxson, Catelijne Muller and Anja Kaspersen discuss that we ought to build AI systems that augment people, and that these systems must utilize what we know about humans, because the impact on privacy, sustainability, trust, legal consequences and resilience worries us.

If we don’t understand how humans work then how can we build a system that will augment that aspect?

We need human-level judgement in order to help humans make better judgements. How do you see AI supporting humans and what are the risks? Combining behavioral science with data science is again going to raise risks. It gives us a unique lens on markets, so we can identify vulnerability, the interests of a group of people, and who or what works against whose interests, and then give guidance to firms.

When you are more predictable you are more exploitable

What we develop, we should use in a responsible manner. Face recognition is a high-impact AI, so ask what the most important element of responsibility is here. Autonomy? Technical robustness? Transparency, privacy or fairness? Regulate it in a proper way: when do we use it, why do we use it, do we need any specific regulation? Regulation is coming. Ethics guidelines are out there and we should find experts to talk about them in a high-level discussion.

Learn how to ask questions! Human-in-command approach: why develop the system? Is it scientifically proven that this app can detect my emotions?

Changing behaviors and integrity? We should utilize knowledge from sociology because how people interact is important. The same applies to ethics and philosophy on the one hand and law on the other. In Europe we focus mostly on legal requirements. How do you bring in the right expertise and how do you bring it to policy makers? Judgement. Human judgement is essential in healthcare, which is an obvious domain that requires human sensitivity.

Augmenting humans with interaction.

🛰 AI for space exploration

Andy T. shared with us possible uses of AI, and especially deep reinforcement learning, for space exploration, guidance and control. A few of them include:

- Spacecraft: navigation, find the best path

- Autonomous exploration: how to communicate with the spacecraft ‘hey take a closer look at this star please’

- Processing data & data compression: spacecraft collect data and transmit it back to where the real processing will be done.

- Novelty detection: what’s different in the terrain/space etc. than what we saw before

- Simulation: what it would be like if we colonize the galaxy

- Design robots to explore more complex terrain

- Dataset generation for tasks like ‘I’m on Earth, how do I get to Venus orbit? What is the optimal path?’

Policy learning, value function learning and neural networks are among the most suitable methods for the above tasks.

😷🤒 NLP-inspired models for comorbidity networks in health care

# causal inferences with limited data

Comorbidity is when two diseases arise at the same or nearly the same time. Is there any causal or conditional independence relationship? Think from the data perspective rather than from medical research, and then link it to medical research. Tarek N. addresses this using a neural net architecture.

Imagine the following scenario. The data includes basic demographic details, diagnosis and the medical service performed. The problem is that you have access to a limited medical history. Each patient is represented as a 10-dim. binary vector z_d indicating the presence or absence of a certain medical condition within a year (so the cardinality is gigantic).

Then you randomly sample n conditions from the history, aiming to estimate P(z_1, …, z_d | x_1, …, x_d, age, gender) (i.e., given this history, what is the probability of observing that history?), and you try different conditions (conditions = distance between two vectors).
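To picture the data side of this, here is how I would sketch the encoding and the sampling step. It is my own reading of the setup and not the speaker’s code: the condition list, the helper names `encode_history` and `sample_context`, and the choice of n = 2 are all hypothetical, and the model itself is left out.

```python
import random

# Hypothetical, tiny vocabulary of medical conditions.
CONDITIONS = ["diabetes", "hypertension", "asthma", "depression", "obesity"]


def encode_history(diagnoses):
    """Binary vector z: 1 if the condition appears in the patient's year, else 0."""
    present = set(diagnoses)
    return [1 if condition in present else 0 for condition in CONDITIONS]


def sample_context(z, n, rng):
    """Keep a random subset of at most n observed conditions as the input x."""
    observed = [i for i, bit in enumerate(z) if bit == 1]
    kept = set(rng.sample(observed, min(n, len(observed))))
    return [1 if i in kept else 0 for i in range(len(z))]


rng = random.Random(0)
z = encode_history(["diabetes", "obesity", "hypertension"])
x = sample_context(z, n=2, rng=rng)
print(z, x)
```

Pairs of (x, z), together with age and gender, would then be the training examples for a model that estimates the probability above.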

The model architecture includes two components: the encoder and the probability expander. The former outputs the data embedding while the probability expander outputs the context conditions.

The word that I kept in mind from the day (so as to remember what to write) is ‘Cheedar’, which comes from cheddar but is pronounced in a 🇳🇱 way and stands for: Collaboration, Human judgement, Explainability, Ethical, DAta ownership, Responsibility. There were many other interesting talks, on edge computing for example, that I didn’t write about.

I hope I’ll write soon about my experiences from the 2nd day.

Until then happy life with or without intelligent 🤖surrounding you.